AWS S3 Sync vs CP: Which one is better for which use cases?

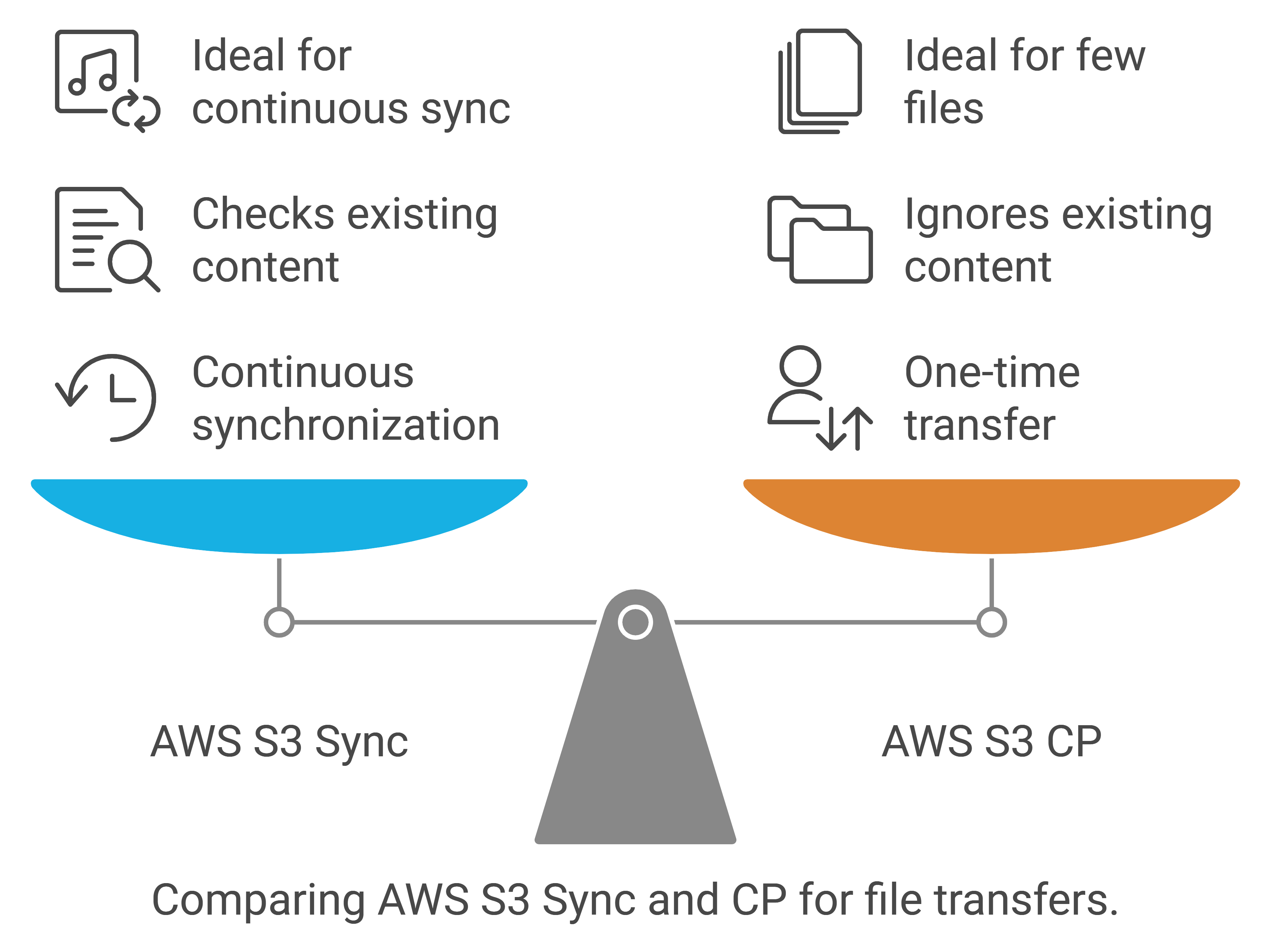

The aws s3 sync and aws s3 cp commands serve different purposes in AWS CLI operations. Here’s what you need to know about each:

AWS S3 Sync

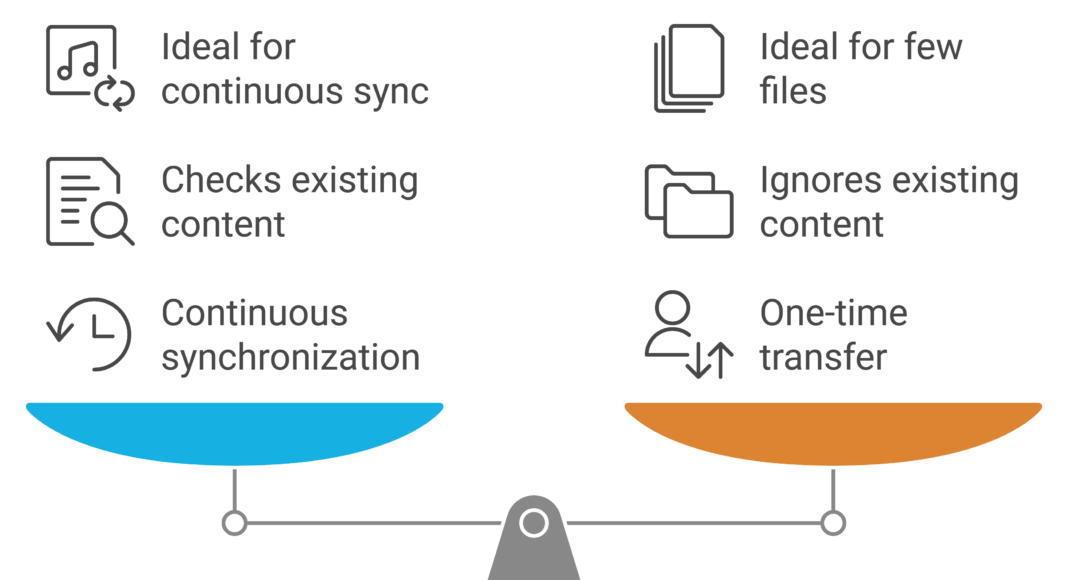

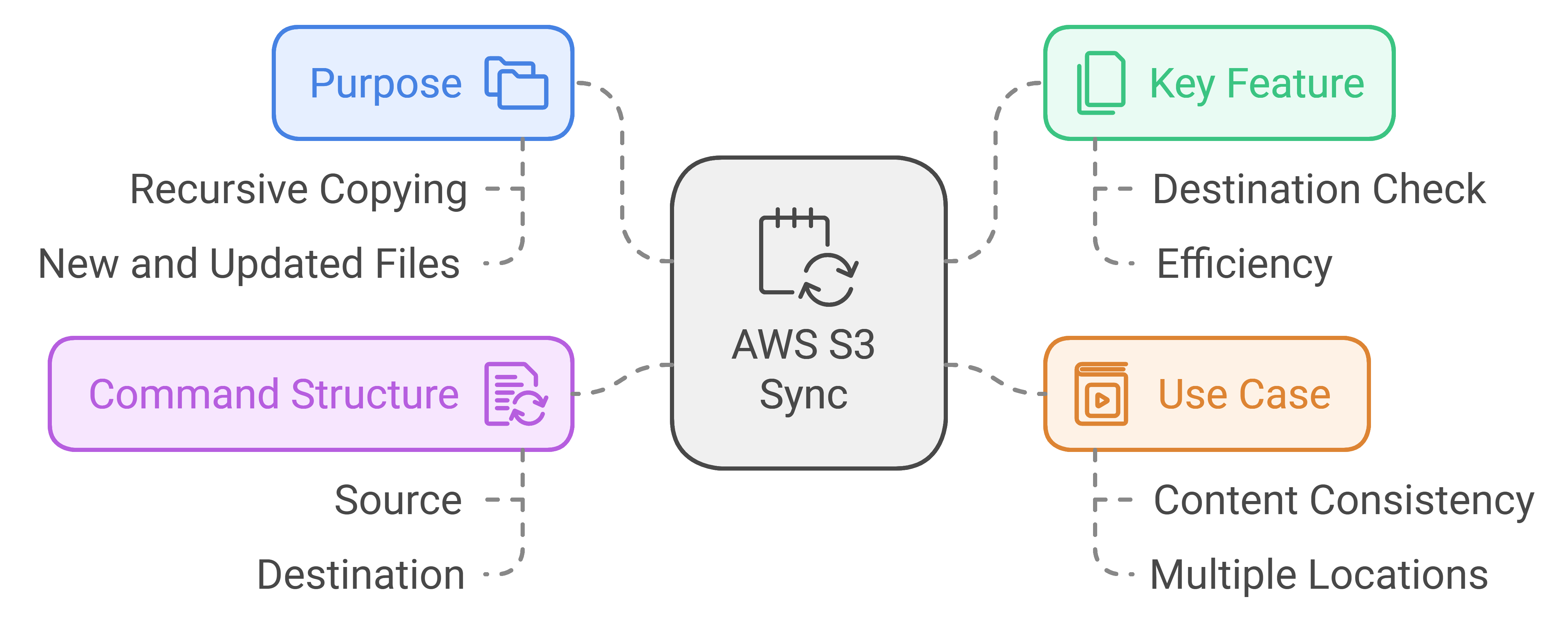

- Purpose: Synchronizes content between directories or S3 buckets by recursively copying new and updated files from the source to the destination.

- Key Feature: Copies only new or modified files. It checks the destination first and skips files that are already there unchanged.

- Use Case: Maintaining identical content across locations

- Command Structure:

aws s3 sync source destination

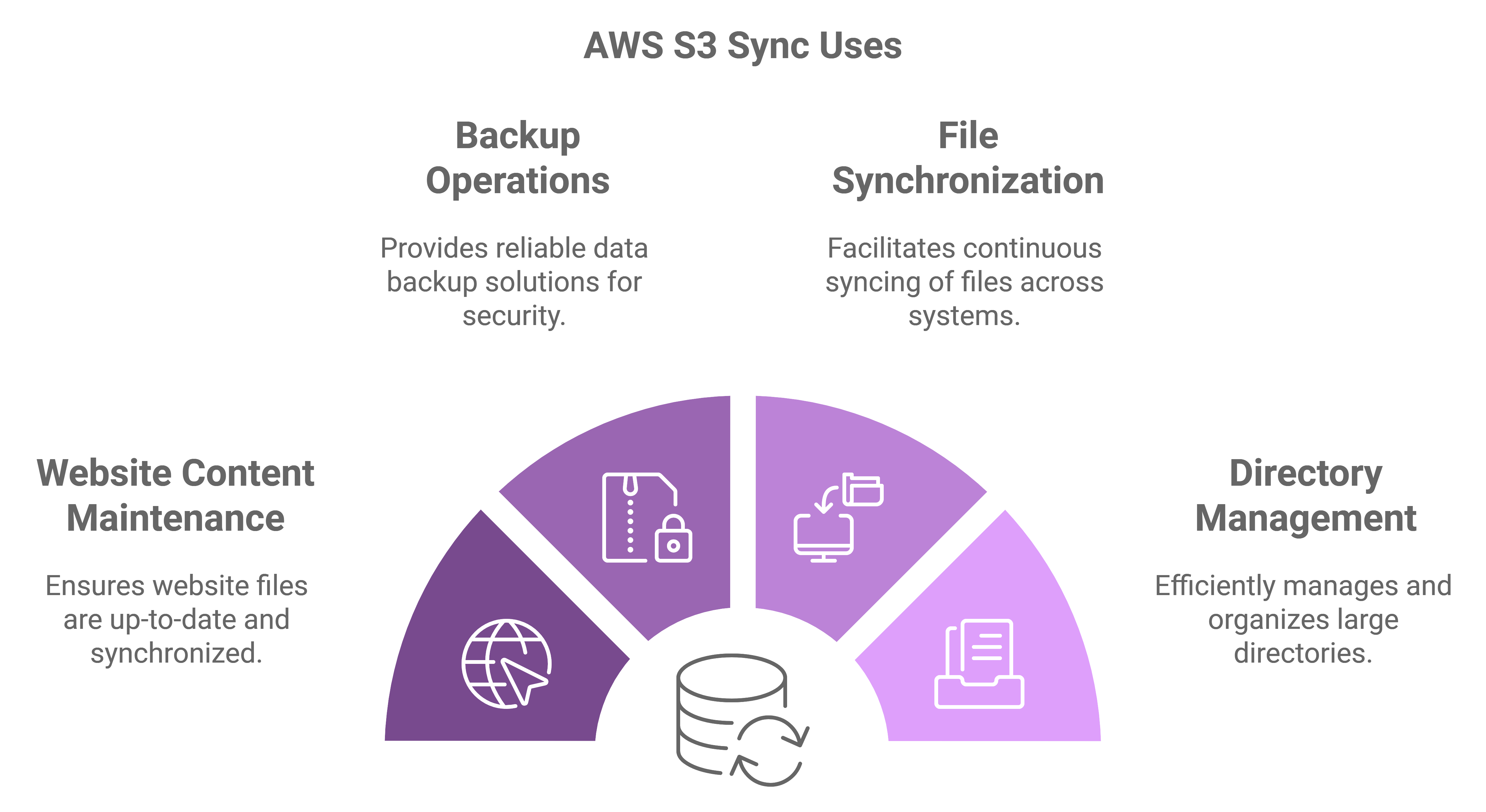
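To make the "only new or modified files" rule concrete, here is a minimal local sketch of the decision. It assumes the CLI's documented default behavior (copy when the file is missing at the destination, when the sizes differ, or when the source was modified more recently). The function name `needs_copy` is hypothetical, and it works on two local paths purely for illustration; it is not the CLI's own code.

```shell
# needs_copy SRC DST: exit 0 when SRC would be copied under the rule above.
# Hypothetical helper for illustration; DST is a local stand-in for the
# destination object.
needs_copy() {
    src=$1
    dst=$2
    # Missing at the destination: always copy.
    [ -e "$dst" ] || return 0
    # Different size: copy.
    src_size=$(wc -c < "$src")
    dst_size=$(wc -c < "$dst")
    [ $((src_size)) -eq $((dst_size)) ] || return 0
    # Same size, but the source was modified more recently: copy.
    [ "$src" -nt "$dst" ] && return 0
    # Otherwise the file is treated as unchanged and skipped.
    return 1
}
```

For example, `needs_copy report.csv backup/report.csv && echo "would copy"` prints the message only when one of the three conditions holds.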

When to Use AWS S3 Sync:

- Maintaining website content

- Backup operations

- Ongoing file synchronization

- Large directory management

AWS S3 Sync Use Case Examples

1. Sync all local objects to the specified bucket

Useful for uploading a large number of local files to an S3 bucket in one command.

cd your-local-directory

aws s3 sync . s3://my-bucket

2. Sync all S3 objects from the specified S3 bucket to another bucket

This is handy for keeping two S3 buckets in sync, useful for disaster recovery, data replication, or data migration scenarios.

aws s3 sync s3://source-bucket s3://destination-bucket

3. Sync all S3 objects from the specified S3 bucket to the local directory

This is useful for downloading files from S3 to your local machine for analysis, backup, or processing.

aws s3 sync s3://source-bucket .

4. Sync all local objects to the specified bucket except ".jpg" files

This can be useful when you want to upload all files except specific types to S3, like excluding image files for a document storage sync.

aws s3 sync . s3://my-bucket --exclude "*.jpg"

5. Sync only ".txt" files from the local directory to the specified bucket

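Useful for finer-grained control over which files get uploaded to S3. Filters are applied in the order given and later filters take precedence, so --exclude "*" must come before --include "*.txt"; with the order reversed, the final --exclude "*" would exclude everything. This uploads files with the .txt extension and skips the rest.

aws s3 sync . s3://my-bucket --exclude "*" --include "*.txt"

6. Sync all objects between buckets in different regions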

This is necessary when you need to replicate data across regions for redundancy, compliance, or faster access requirements.

aws s3 sync s3://source-bucket s3://destination-bucket --source-region us-west-1 --region us-east-1

AWS S3 CP

- Purpose: Copies individual files or entire directories. It works much like aws s3 sync but does not check what already exists at the destination: it copies everything from the source, even files that are already there.

- Key Feature: Copies everything regardless of existing content

- Use Case: One-time file transfers. Better suited to a few files than to continuous synchronization.

- Command Structure:

aws s3 cp source destination
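Because aws s3 cp copies a single file by default and needs --recursive for a directory, a small wrapper can pick the right form. This is a minimal sketch: the function name `cp_to_s3` and the bucket name `example-bucket` are hypothetical, and it assumes the source is a local path.

```shell
# cp_to_s3 SRC DST: copy a local file or directory to S3 with aws s3 cp.
# Hypothetical helper; adds --recursive only when SRC is a directory.
cp_to_s3() {
    src=$1
    dst=$2
    if [ -d "$src" ]; then
        # Directories are copied only when --recursive is given.
        aws s3 cp "$src" "$dst" --recursive
    else
        # A single file needs no extra flag.
        aws s3 cp "$src" "$dst"
    fi
}
```

Calling `cp_to_s3 ./reports s3://example-bucket/reports/` would run the recursive form, while `cp_to_s3 ./notes.txt s3://example-bucket/` would copy just the one file.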

When to Use AWS S3 CP:

- Single file uploads

- Complete directory copies

- Version management

- Data migration
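The --exclude and --include examples in this article (for both sync and cp) depend on filter order: everything is included by default, and a filter that appears later in the command overrides an earlier one. The sketch below models that rule locally so the effect of reordering is easy to see. The function name `is_included` and the `exclude:PATTERN` / `include:PATTERN` argument format are assumptions made for this illustration, not CLI syntax.

```shell
# is_included NAME FILTER...: exit 0 when NAME survives the filters.
# Each FILTER is written "exclude:PATTERN" or "include:PATTERN"
# (a format invented for this sketch).
is_included() {
    name=$1
    shift
    verdict=include              # everything is included by default
    for filter in "$@"; do
        kind=${filter%%:*}       # text before the first colon
        pattern=${filter#*:}     # text after the first colon
        case $name in
            $pattern) verdict=$kind ;;   # a later match overrides an earlier one
        esac
    done
    [ "$verdict" = include ]
}
```

With this model, `is_included notes.txt 'exclude:*' 'include:*.txt'` succeeds, while `is_included notes.txt 'include:*.txt' 'exclude:*'` fails because the trailing exclude matches last.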

AWS S3 CP Use Case Examples

1. Recursively copying all local objects to the specified bucket

Useful for uploading a large number of local files to an S3 bucket in one command.

cd your-local-directory

aws s3 cp . s3://my-bucket --recursive

2. Recursively copying all S3 objects from the specified S3 bucket to another bucket

This is handy for one-time copy operations between S3 buckets. If the command is run periodically, it does not check the destination, so every run copies all objects in the source bucket to the destination bucket again.

aws s3 cp s3://source-bucket/ s3://destination-bucket/ --recursive

3. Recursively copying all S3 objects from the specified S3 bucket to the local directory

This is useful for downloading files from S3 to your local machine for analysis, backup, or processing.

aws s3 cp s3://source-bucket . --recursive

4. Recursively copying all local objects to the specified bucket except ".jpg" files

This can be useful when you want to upload all files except specific types to S3, like excluding image files for a document storage sync.

aws s3 cp your-source-directory s3://my-bucket/ --recursive --exclude "*.jpg"

5. Recursively copying only ".txt" files from the local directory to the specified bucket

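Useful for finer-grained control over which files get uploaded to S3. Filters are applied in the order given and later filters take precedence, so --exclude "*" must come before --include "*.txt"; with the order reversed, the final --exclude "*" would exclude everything. This uploads files with the .txt extension and skips the rest.

aws s3 cp your-source-directory s3://my-bucket/ --recursive --exclude "*" --include "*.txt"

6. Copy all objects between buckets in different regions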

This is necessary when you need to replicate data across regions for redundancy, compliance, or faster access requirements.

aws s3 cp s3://source-bucket s3://destination-bucket --source-region us-west-1 --region us-east-1 --recursive

Performance Considerations

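- By default, aws s3 sync compares file size and last-modified time to decide what to copy, rather than checksums of file contents

- aws s3 cp is faster for one-time transfers because it does not compare files or check whether they already exist at the destination

- aws s3 sync has more overhead up front because it must list and compare both sides, but it uses less bandwidth because it transfers only new or changed files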
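The bandwidth trade-off can be estimated locally before choosing a command. The sketch below counts how many top-level files each approach would transfer, using a local directory as a stand-in for the destination and assuming the default size and last-modified comparison. The function name `count_transfers` is hypothetical, and the result is an approximation, not output from the CLI.

```shell
# count_transfers SRC_DIR DST_DIR: print "cp=<n> sync=<m>" for the
# top-level files in SRC_DIR. DST_DIR is a local stand-in for the
# destination; hypothetical helper for illustration only.
count_transfers() {
    src_dir=$1
    dst_dir=$2
    cp_count=0
    sync_count=0
    for src in "$src_dir"/*; do
        [ -f "$src" ] || continue
        cp_count=$((cp_count + 1))          # cp --recursive sends every file
        dst="$dst_dir/$(basename "$src")"
        changed=no
        if [ ! -e "$dst" ]; then
            changed=yes                     # missing at the destination
        else
            src_size=$(wc -c < "$src")
            dst_size=$(wc -c < "$dst")
            if [ $((src_size)) -ne $((dst_size)) ] || [ "$src" -nt "$dst" ]; then
                changed=yes                 # size differs or source is newer
            fi
        fi
        [ "$changed" = yes ] && sync_count=$((sync_count + 1))
    done
    echo "cp=$cp_count sync=$sync_count"
}
```

When the two counts are close, the comparison work done by sync buys little; when the sync count is much smaller, sync saves most of the transfer.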

Best Practices

- Use aws s3 sync for maintaining mirror copies

- Use aws s3 cp for one-time transfers

- Consider using aws s3 sync with --delete for exact mirrors

- Use aws s3 cp with --recursive for directory copies
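Since --delete removes files at the destination that no longer exist at the source, it is worth previewing a mirror before running it. A minimal sketch using the CLI's --dryrun flag, which prints the planned operations without performing them; the function name `preview_mirror` and the bucket name `example-bucket` are hypothetical.

```shell
# preview_mirror SRC DST: show what an exact mirror would copy and delete,
# without changing anything. Hypothetical wrapper around aws s3 sync.
preview_mirror() {
    aws s3 sync "$1" "$2" --delete --dryrun
}
```

Running `preview_mirror ./site s3://example-bucket` lists the planned uploads and deletions; once the list looks right, the same sync command can be run without --dryrun.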

Reference: AWS CLI Command Reference

If you are dealing with a large number of files and using the AWS S3 CLI, see our other article on boosting and tuning CLI settings.